Winston

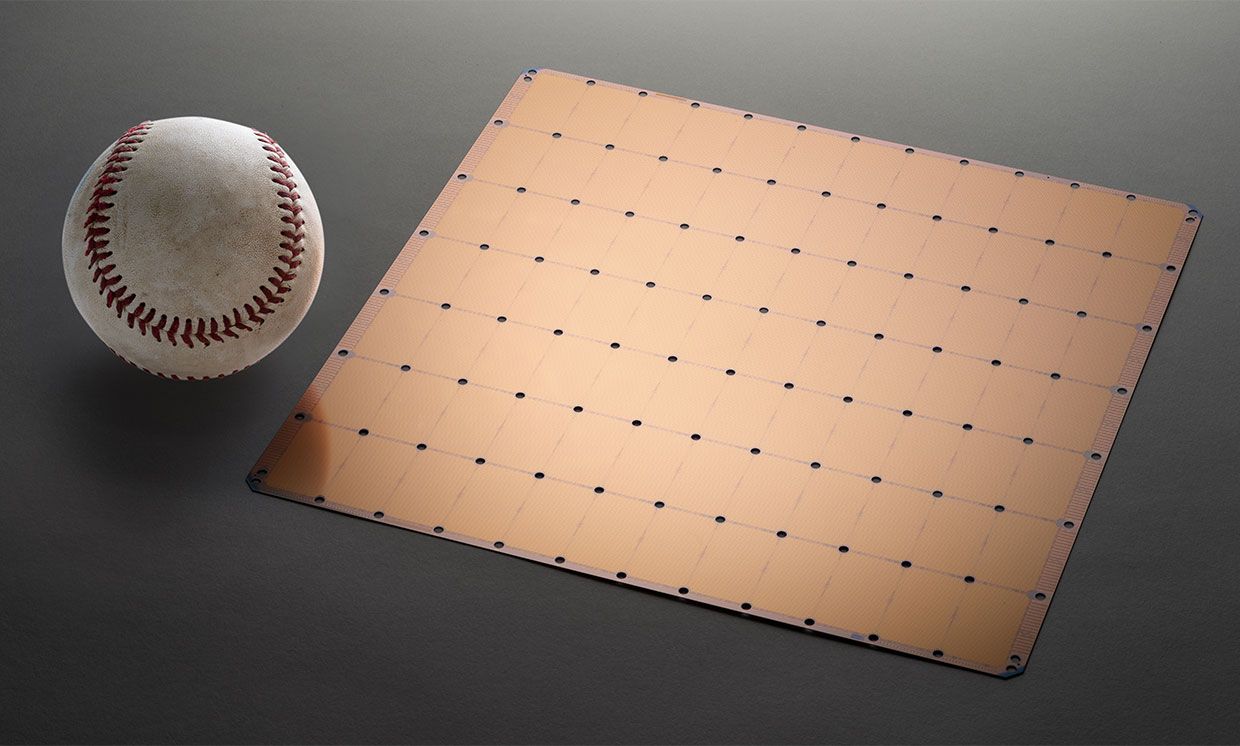

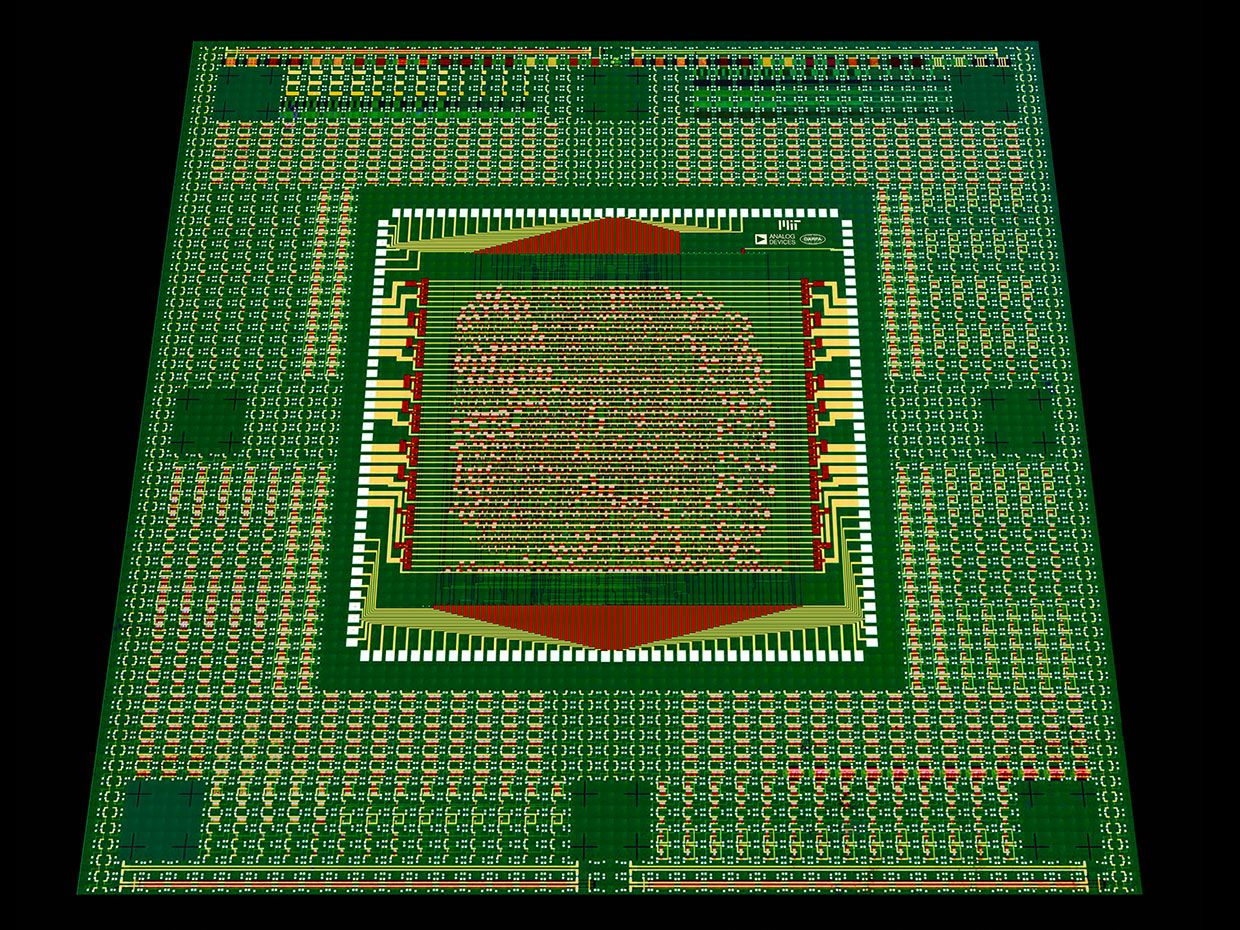

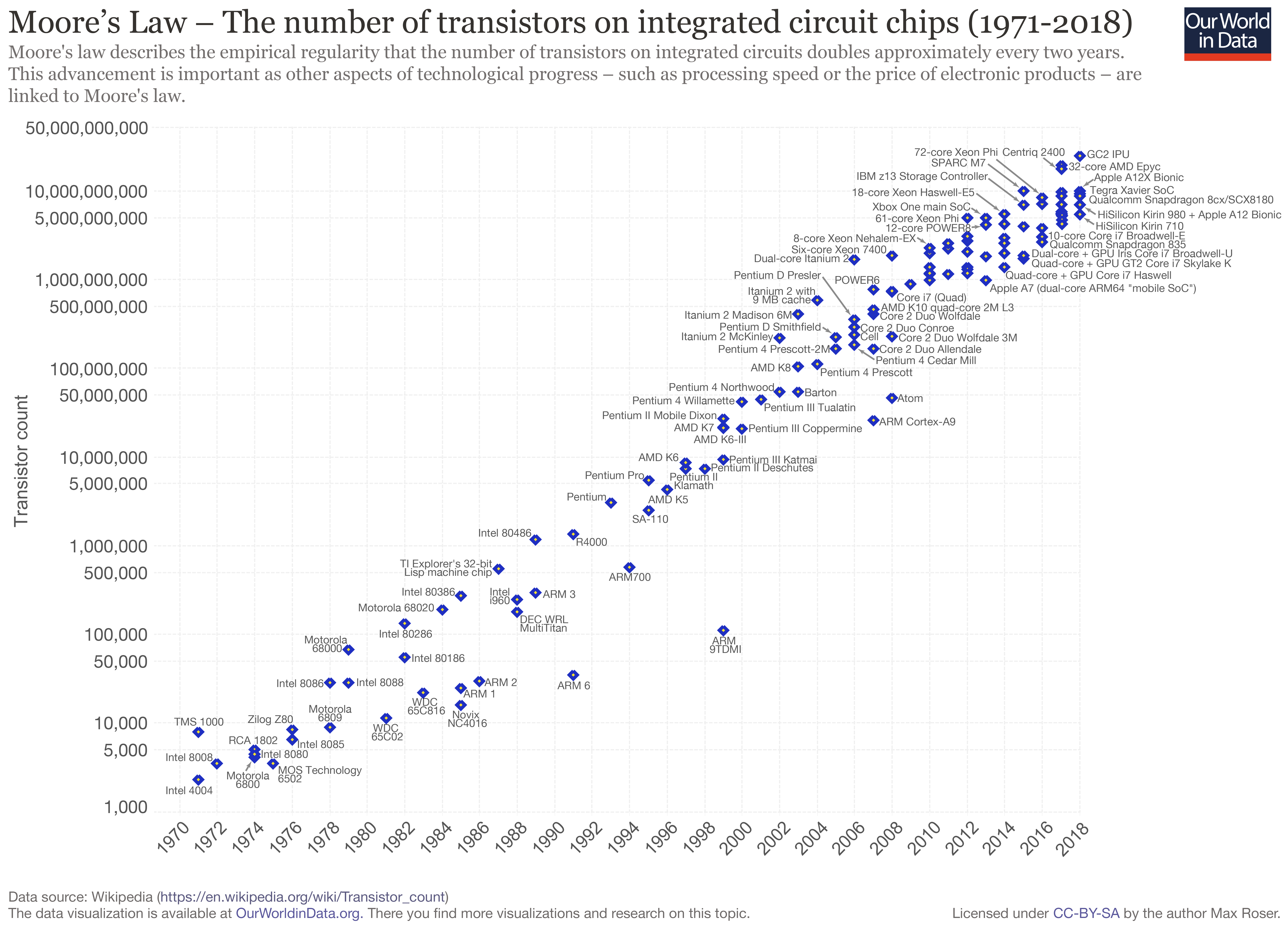

Intel sees the light and admits, without explicitly saying so, that some sort of multi-chip architecture like AMD's Infinity Fabric-linked chiplets is the way to go. They will not be able to compete with AMD on price/performance until they split their large, lower-yield, and therefore much more expensive monolithic CPU dies into multiple smaller, interconnected dies.
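To put rough numbers on the yield argument, here is a back-of-the-envelope sketch using the simple Poisson yield model, Y = e^(-D·A), where D is defect density and A is die area. The defect density (0.1 defects/cm², i.e. 0.001/mm²) and the 600 mm² total silicon area are made-up illustrative values, not Intel or AMD figures. The sketch also assumes dies are tested before packaging, so a defect only scraps the small die it lands on, and it ignores edge loss, packaging, and interconnect costs, all of which eat into the chiplet advantage in practice.

```python
import math


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson model: Y = exp(-D * A)."""
    return math.exp(-defects_per_mm2 * area_mm2)


def silicon_per_good_cpu(total_area_mm2: float, n_chiplets: int, defects_per_mm2: float) -> float:
    """Expected wafer area consumed per working CPU when total_area is split into n equal dies.

    Assumption: every die is tested before packaging (known-good die), so a bad
    die is discarded on its own. Edge loss, packaging and interconnect are ignored.
    """
    die_area = total_area_mm2 / n_chiplets
    y = poisson_yield(die_area, defects_per_mm2)
    # On average each good die costs die_area / y of wafer; a CPU needs n of them.
    return n_chiplets * die_area / y


if __name__ == "__main__":
    TOTAL_AREA_MM2 = 600.0  # hypothetical total CPU silicon area
    DEFECTS_PER_MM2 = 0.001  # hypothetical defect density (0.1 per cm^2)
    for n in (1, 2, 4, 8):
        y = poisson_yield(TOTAL_AREA_MM2 / n, DEFECTS_PER_MM2)
        area = silicon_per_good_cpu(TOTAL_AREA_MM2, n, DEFECTS_PER_MM2)
        print(f"{n} die(s): per-die yield {y:.3f}, wafer area per good CPU {area:.1f} mm^2")
```

Under these assumptions the monolithic die burns noticeably more wafer per working CPU than the four- or eight-chiplet split, and the gap grows with die size and defect density, which is the economic core of the chiplet argument.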

Intel's View of the Chiplet Revolution

Ramune Nagisetty is helping Intel establish its place in a new industry ecosystem centered on chiplets

https://spectrum.ieee.org/tech-talk/semiconductors/processors/intels-view-of-the-chiplet-revolution