Winston

Lorenzo von Matterhorn


There's only one McDonald's in Los Alamos, so on my last tourist visit there I was unknowingly parked right over this tunnel. As always, quoted text is italicized; mine isn't:

Secret Los Alamos tunnel revealed

[video=youtube;PlLvb_d8saE]https://www.youtube.com/watch?v=PlLvb_d8saE[/video]

Top-secret super-secure Los Alamos vault declassified

[video=youtube;dWA5Z32tiKM]https://www.youtube.com/watch?v=dWA5Z32tiKM[/video]

Historic Manhattan Project Sites at Los Alamos

[video=youtube;axkQ4UjTc8M]https://www.youtube.com/watch?v=axkQ4UjTc8M[/video]

To ensure that our aging nuke stockpile continues to be potentially functional, we have the stockpile stewardship programs. Given the incredibly impressive stuff seen in the videos below and the simulation power of modern supercomputers, I suspect they are now able, or soon will be able, to simulate and optimise a nuclear warhead entirely within a supercomputer, although that's not the openly stated goal of stockpile stewardship. Consider the percentage of successful tests during the 1950s, when analysis and computing capabilities were absolutely CRUDE compared to today's, and how successfully those crude tools were used even back then to reduce the mass and increase the efficiency of devices. With that in mind, I think my suspicion may be justified.

The Big Science of Stockpile Stewardship (PDF)

https://aip.scitation.org/doi/pdf/10.1063/1.5009218

Understanding how the fissile Pu pit is compressed during the primary-stage implosion is fundamental to nuclear weapons design. By simulating the pit’s compression using nonfissile Pu surrogates, researchers at LANL’s DARHT facility are able to generate a variety of two-dimensional, full-scale images that then serve to inform weapons primary design. At the Nevada Test Site, now known as the Nevada National Security Site (NNSS), primary implosions are performed with fissile Pu, but material quantities are reduced sufficiently to ensure that the assembly remains subcritical at all times, in compliance with the CTBT.

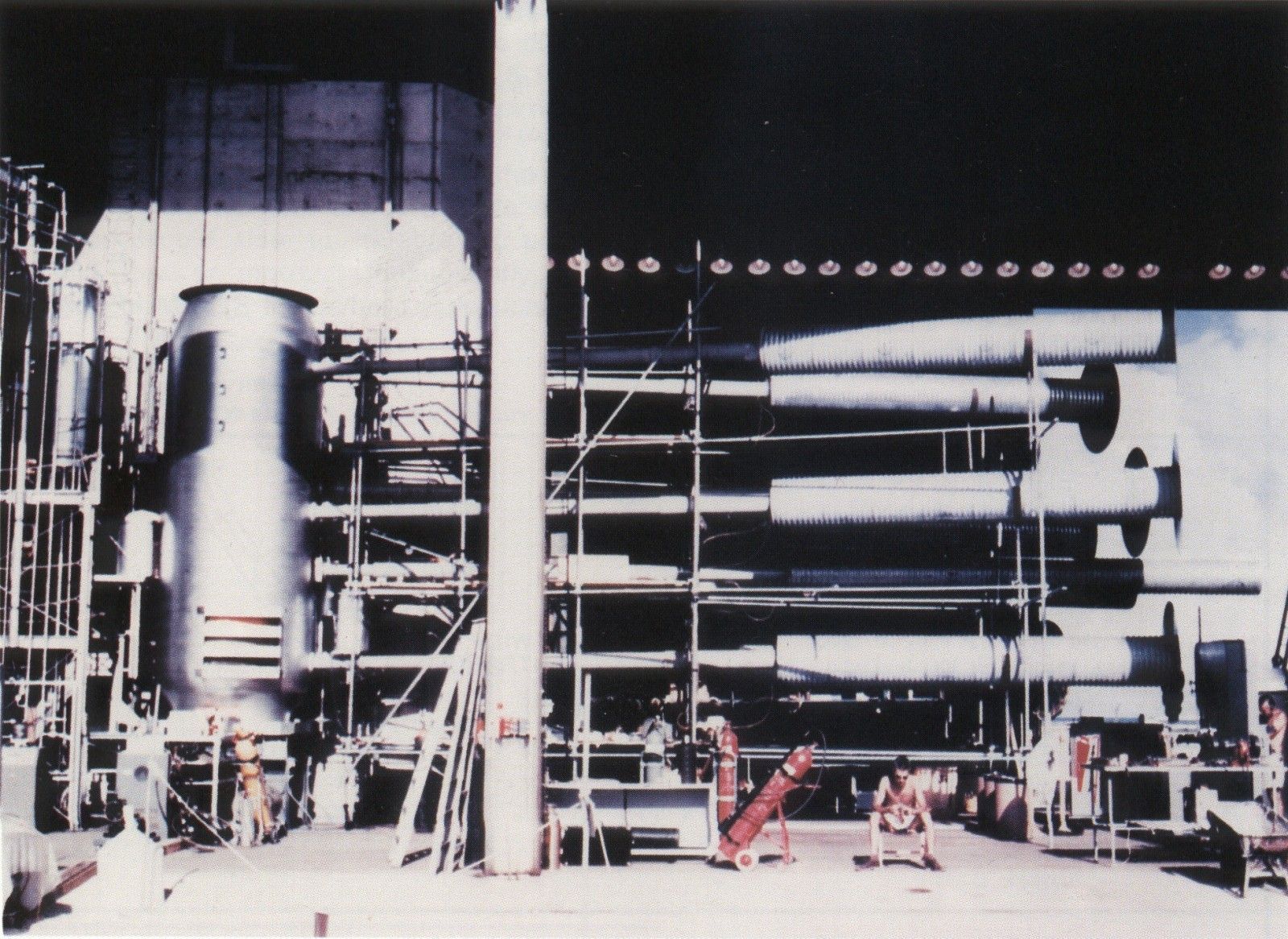

FIGURE 1. The US Department of Energy’s Stockpile Stewardship Program makes use of (clockwise from top left) subcritical plutonium implosive testing equipment at the Nevada National Security Site, supercomputers such as Lawrence Livermore National Laboratory’s Sequoia, electron accelerators at Los Alamos National Laboratory’s Dual-Axis Radiographic Hydrodynamic Test, and an inertial confinement fusion chamber at LLNL’s National Ignition Facility. (Images courtesy of the US Department of Energy.)

Stockpile Stewardship: Los Alamos - lots of really impressive hardware shown.

[video=youtube;SdRmhrf6oXE]https://www.youtube.com/watch?v=SdRmhrf6oXE[/video]

Trinity Supercomputer Now Fully Operational

[video=youtube;z9eZs2GBn9c]https://www.youtube.com/watch?v=z9eZs2GBn9c[/video]

Stockpile Stewardship: How we ensure the nuclear deterrent without testing - Lawrence Livermore National Laboratory does it, too - more extremely impressive hardware shown.

[video=youtube;8MmujbPYT80]https://www.youtube.com/watch?v=8MmujbPYT80[/video]

Weapon Simulation and Computing Program at LLNL

https://wci.llnl.gov/about-us/weapon-simulation-and-computing

Supercomputers offer tools for nuclear testing — and solving nuclear mysteries

1 Nov 2011

https://www.washingtonpost.com/nati...ar-mysteries/2011/10/03/gIQAjnngdM_story.html

The laboratories, including Livermore in California and Los Alamos National Laboratory and Sandia National Laboratory in New Mexico, are responsible for certifying to the president the safety and reliability of the nation’s nuclear weapons under a Department of Energy program known as stockpile stewardship, run by the National Nuclear Security Administration.

Over the years, various flaws have been detected in the nuclear arsenal, some worse than others. A serious incident occurred in 2003, when traditional checks revealed a problem that, while not catastrophic, was widespread. Details of that problem are also classified. In response to the discovery, Livermore scientists performed a series of computer simulations, followed by high-explosive but nonnuclear experiments at Los Alamos, that showed the weapons did not need a major repair that might have cost billions of dollars, Goodwin said. In an earlier time, he added, the only way to reach that conclusion might have been to resume nuclear testing.

At the time the test ban treaty was defeated, critics said the United States might someday need to return to testing. Six former secretaries of defense in Republican administrations, including Caspar W. Weinberger, Richard B. Cheney and Donald H. Rumsfeld, wrote to the Senate in 1999 that the planned stockpile stewardship program “will not be mature for at least 10 years” and could only mitigate, not eliminate, a loss of confidence in weapons without testing.

Sen. Jon Kyl (R-Ariz.), who has long opposed the treaty, said: “Computer simulation is a part of the stockpile stewardship program, which scientists say has been helpful. One told me it produced good news and bad news. The good news is that it tells us a lot more about these weapons than we ever knew before. The bad news is that it tells us the weapons have bigger problems than we realized. While computers are helpful, they’re not a substitute for testing. That’s why, even though we’re not testing right now, we should not give up the legal right to test.”

Something they don't talk about much, but which is very important if one wants to design a functional and optimized nuke within a supercomputer:

Subcritical experiments

https://thebulletin.org/subcritical-experiments

https://str.llnl.gov/str/Conrad.html

[video=youtube;bGf4-ZOjyVY]https://www.youtube.com/watch?v=bGf4-ZOjyVY[/video]
