Winston

RESTORING THE HEATHKIT ES-400 COMPUTER

https://www.nutsvolts.com/magazine/article/restoring-the-heathkit-es-400-computer

Excerpts:

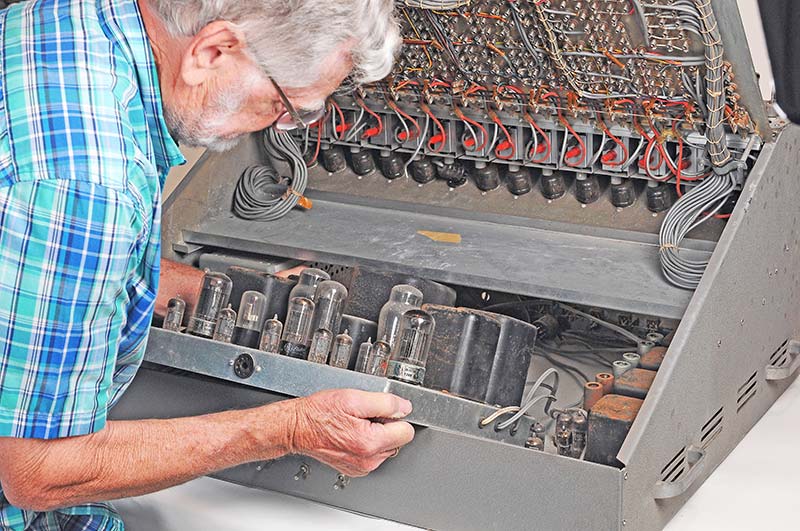

When I first got into electronics in the mid-1950s, I would pore over the Heathkit catalogs and dream about owning a 5” scope. When they came out with the ES-400 Electronic Analog Computer with its 45 vacuum tubes across the top, I wanted one in the worst way. It looked so cool (Figure 1)! And it was only $945. Gulp!

Fast forward six decades. Today, I finally have one in my shop on my bench completely restored! Figure 2 is the proof. It took me eight months of part-time work to restore all 168 pounds. In this article, I would like to share a few of the trials and tribulations I went through to resurrect this beast.

Several years ago, I restored Heathkit’s smaller analog computer, the EC-1. You may have read about it in the May 2016 issue of Nuts & Volts. Afterwards, I vowed to find and restore my dream computer: the ES-400. Amazingly, a nice gentleman in Northern California heard about my quest and just happened to have one in his storage locker. He said I was welcome to it for free if I would give it a good home.

SPECIFICATIONS

The “Big Kahuna” had 25 major assemblies containing a total of 73 vacuum tubes and the following specifications:

Model: ES-400 (sometimes called H-1)

Original Price: $945 (equivalent 2019 dollars = $8,175)

Time Period Sold: 1956-1962

Quantity Sold: Approximately 250-400

Weight: 168 pounds

Dissipation: 450 watts

Assemblies:

(1) ES-2 Amplifier Power Supply (regulated ±250V at 250 mA, -450V at 50 mA, 6.3VAC at 14.5A)

(1) ES-50 Reference Power Supply (regulated ±100V)

(3) ES-100 Initial Condition Power Supply (2x floating 100V, in each supply)

(1) ES-151 Relay Power Supply (2x 50V)

(15) ES-201 Operational Amplifier (gain = 50,000, output range ±100V at 10 mA)

(1) ES-400 Cabinet and Front Panel (364 jacks, 52 switches, 38 pots, 14 connectors, one meter)

(1) ES-505 Repetitive Oscillator (0.6-6 Hz)

(1) Sola Voltage Regulator Transformer (250 VA)

(1) ES-600 Function Generator (optional)

His restoration wasn't cheap. A few examples from the article:

Problem: Unfortunately, the “free” computer did not include any of the 45 tubes for the 15 op-amps across the top of the cabinet. The 6BQ7As and 6BH6s were pretty reasonable, but the (15) 12AX7s were another story. Some eBay prices were pretty outrageous; $499.99 for a matched pair!!

Solution: Luckily, Chinese 12AX7A tubes were available from TubesAndMore.com for $9.59 each and they worked fine. Refer to Figure 14.
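For a sense of scale, here is a quick back-of-envelope sketch of that tube bill, using only the figures quoted above (45 tubes, 15 op-amps, 15 12AX7s, $9.59 each). The function names are my own, and the total ignores shipping, tax, and the 6BQ7As and 6BH6s, whose prices the excerpt does not give.

```python
# Figures quoted in the article excerpt; everything else is illustrative.
OP_AMPS = 15                 # ES-201 operational amplifiers
OP_AMP_TUBES = 45            # tubes across the top of the cabinet
QTY_12AX7 = 15               # 12AX7s needed, per the excerpt
PRICE_12AX7A_CENTS = 959     # $9.59 each, kept in cents to avoid float rounding


def tubes_per_op_amp(total_tubes: int, op_amps: int) -> int:
    """Tubes per amplifier, assuming they are split evenly."""
    if total_tubes % op_amps != 0:
        raise ValueError("tubes do not divide evenly among the op-amps")
    return total_tubes // op_amps


def replacement_cost_cents(quantity: int, unit_price_cents: int) -> int:
    """Total cost in cents for `quantity` tubes at one unit price."""
    return quantity * unit_price_cents


def format_dollars(cents: int) -> str:
    """Render an integer number of cents as a dollar string."""
    return f"${cents // 100}.{cents % 100:02d}"


if __name__ == "__main__":
    print("Tubes per op-amp:", tubes_per_op_amp(OP_AMP_TUBES, OP_AMPS))
    total = replacement_cost_cents(QTY_12AX7, PRICE_12AX7A_CENTS)
    print("Cost of the 12AX7A set:", format_dollars(total))
```

So the whole set of fifteen 12AX7As comes to well under the price of that single matched pair on eBay.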

Problem: All the nuts and bolts were corroded, as were many other electrical components.

Solution: I replaced every nut, bolt, screw, and washer in the entire machine, and also 51 pots, 64 tube sockets, eight toggle switches, 15 octal plugs, and eight power cords. Plus, the countless resistors and capacitors. Whew!

Just some of the photos from the article:

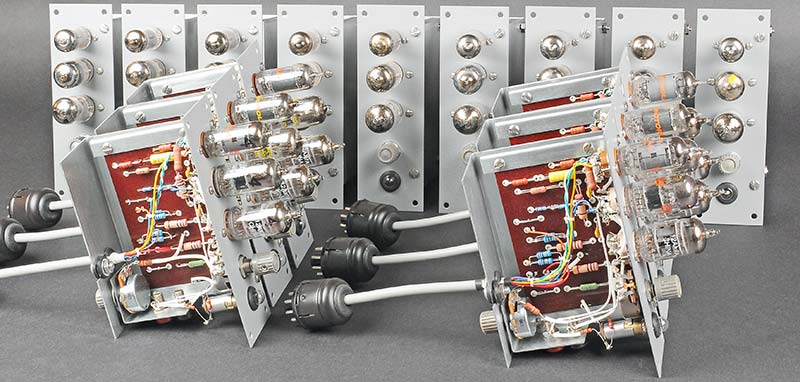

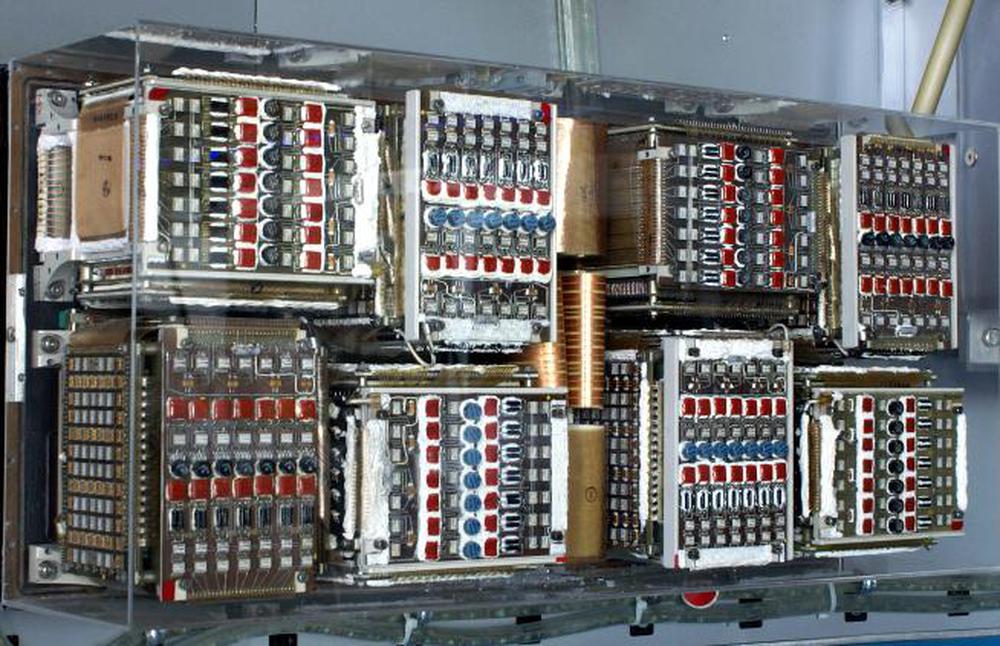

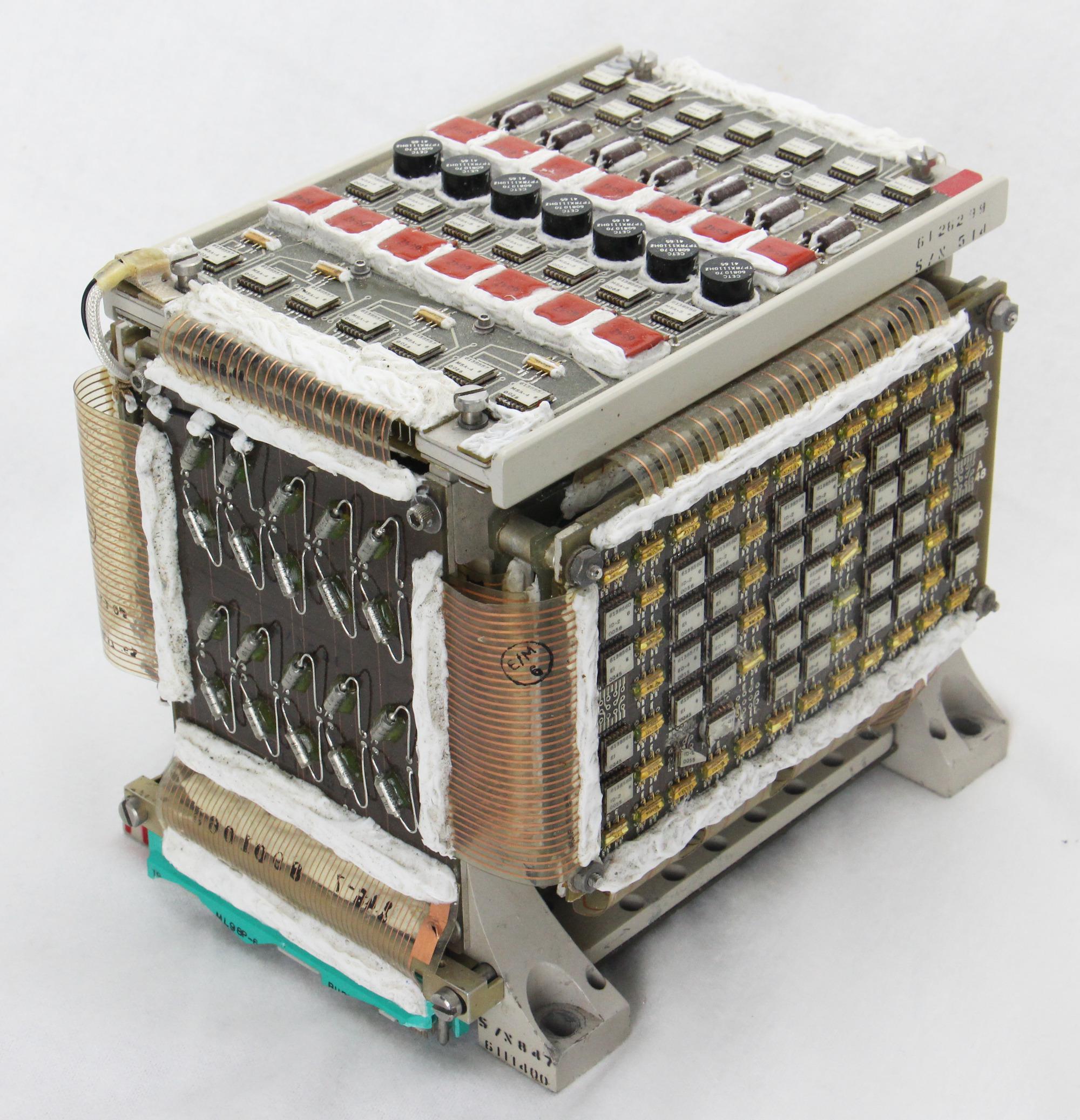

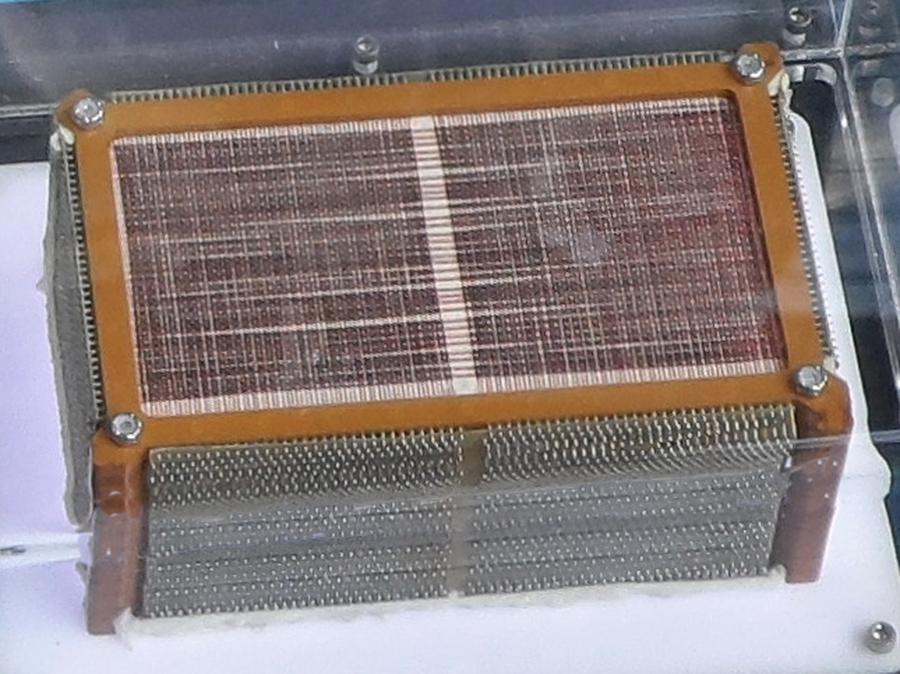

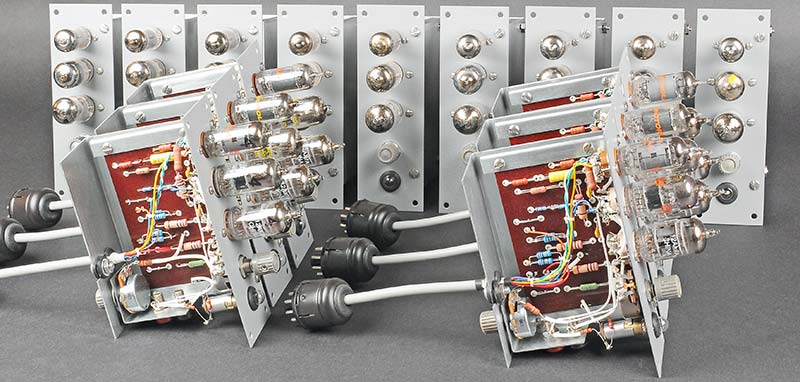

Before:

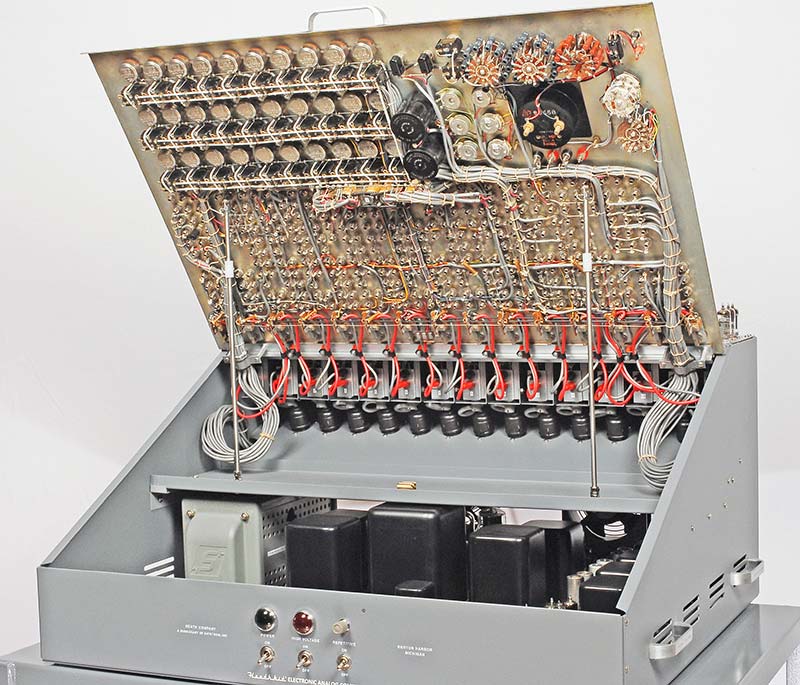

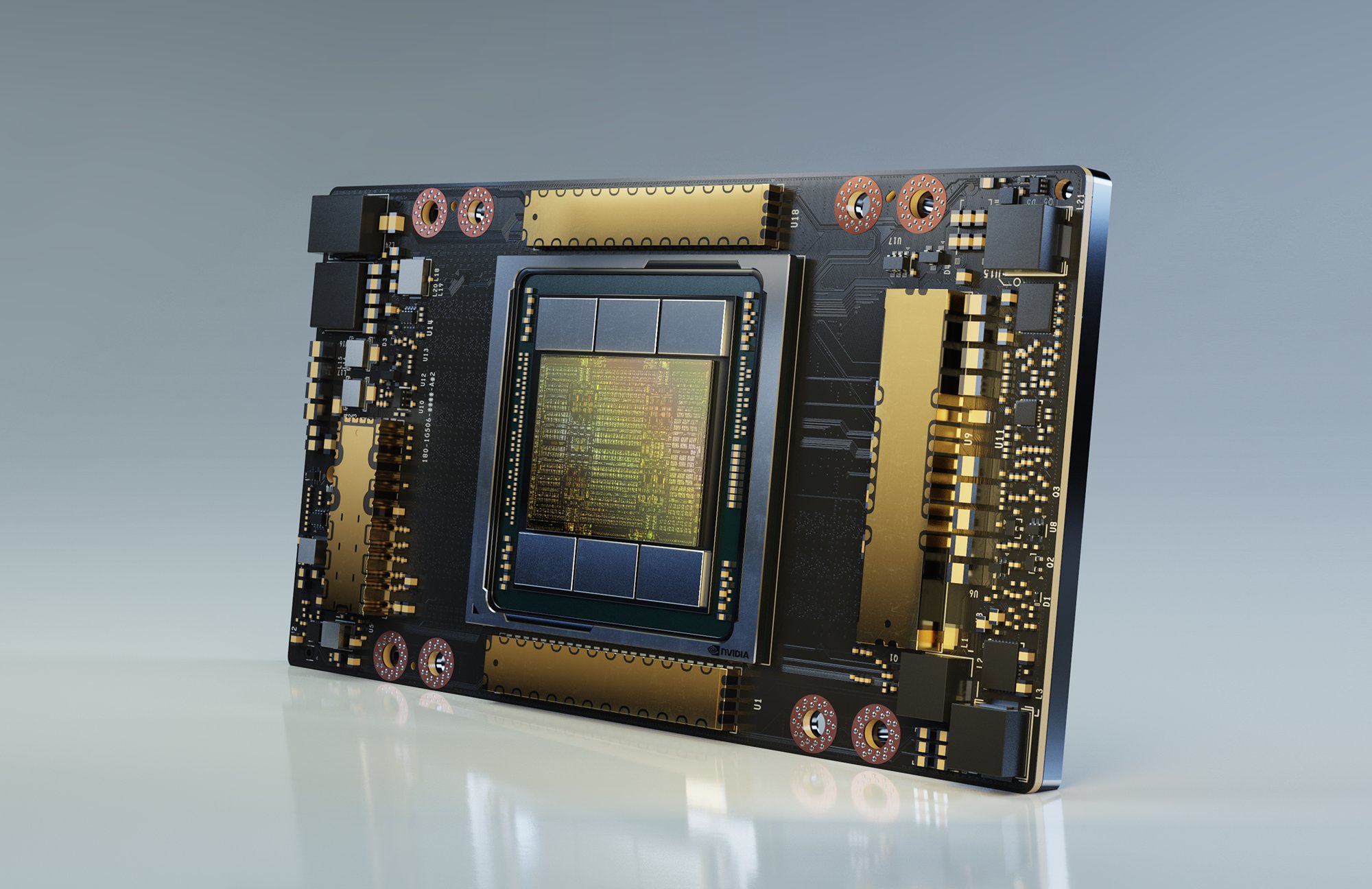

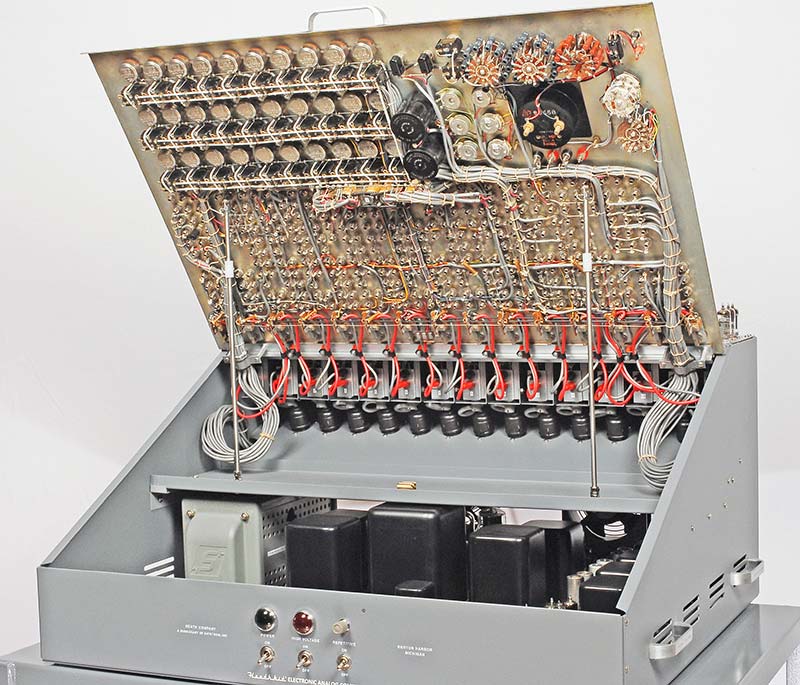

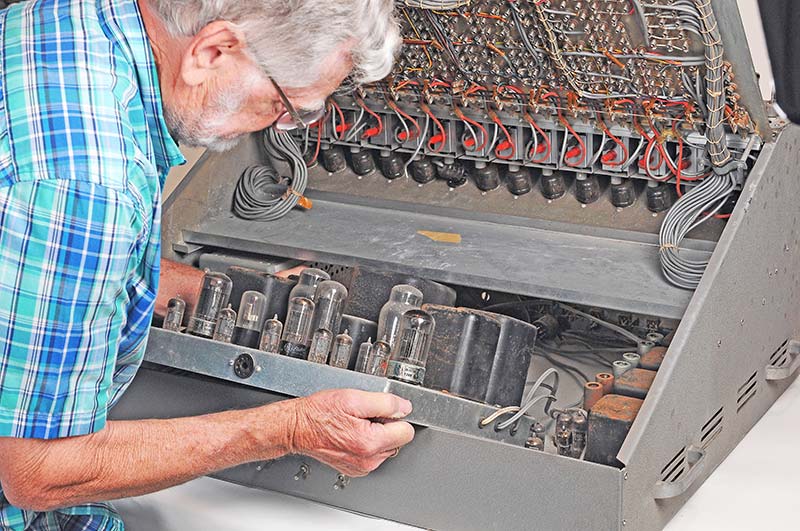

After. Beautiful work:
